
| Robots Exclusion Protocol | |

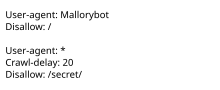

Example of a simple robots.txt file, indicating that a user-agent called "Mallorybot" is not allowed to crawl any of the website's pages, and that other user-agents cannot crawl more than one page every 20 seconds, and are not allowed to crawl the "secret" folder | |

| Status | Proposed Standard |

|---|---|

| First published | 1994 published, formally standardized in 2022 |

| Authors |

|

| Website | robotstxt |

robots.txt is the filename used for implementing the Robots Exclusion Protocol, a standard used by websites to indicate to visiting web crawlers and other web robots which portions of the website they are allowed to visit.
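As a rough illustration of how a well-behaved crawler might consult such a file, the sketch below uses Python's standard `urllib.robotparser` module. The file contents are an assumed rendering of a simple policy (one bot blocked entirely, all others rate-limited and kept out of one folder); the host `example.com`, the agent name `FriendlyBot`, and the exact paths are hypothetical. `Crawl-delay` is a non-standard directive that only some crawlers honor.

```python
from urllib.robotparser import RobotFileParser

# Assumed file contents for illustration; not taken from a real site.
ROBOTS_TXT = """\
User-agent: Mallorybot
Disallow: /

User-agent: *
Crawl-delay: 20
Disallow: /secret/
"""

parser = RobotFileParser()
# parse() accepts an iterable of lines, so no network access is needed.
parser.parse(ROBOTS_TXT.splitlines())

base = "https://example.com"  # hypothetical host

# Mallorybot is barred from every path.
print(parser.can_fetch("Mallorybot", base + "/index.html"))

# Any other agent falls under the "*" group.
print(parser.can_fetch("FriendlyBot", base + "/index.html"))
print(parser.can_fetch("FriendlyBot", base + "/secret/plan.html"))

# Seconds a compliant crawler should wait between requests (None if unset).
print(parser.crawl_delay("FriendlyBot"))
```

Nothing in this check is enforced by the server: the crawler itself decides whether to call `can_fetch` and respect the answer.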

The standard, developed in 1994, relies on voluntary compliance. Malicious bots can use the file as a directory of which pages to visit, though standards bodies discourage countering this with security through obscurity. Some archival sites ignore robots.txt. The standard was used in the 1990s to mitigate server overload. In the 2020s, many websites began denying bots that collect information for generative artificial intelligence.[citation needed]

The robots.txt file can be used in conjunction with sitemaps, a robot inclusion standard for websites.
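One common way the two are combined is a `Sitemap` line inside robots.txt that points crawlers at the sitemap's location. The sketch below, again using Python's `urllib.robotparser` (the `site_maps()` method requires Python 3.8 or later), reads such a line back; the file contents and the sitemap URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumed file contents for illustration; the sitemap URL is hypothetical.
ROBOTS_WITH_SITEMAP = """\
User-agent: *
Disallow: /secret/

Sitemap: https://example.com/sitemap.xml
"""

sitemap_parser = RobotFileParser()
sitemap_parser.parse(ROBOTS_WITH_SITEMAP.splitlines())

# site_maps() returns a list of declared sitemap URLs, or None if there are none.
sitemaps = sitemap_parser.site_maps()
print(sitemaps)
```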