
Apparent magnitude (m) is a measure of the brightness of a star, astronomical object, or other object in the sky, such as an artificial satellite. Its value depends on the object's intrinsic luminosity, its distance from the observer, and any extinction of its light caused by interstellar dust along the line of sight.

Unless stated otherwise, the word magnitude in astronomy usually refers to a celestial object's apparent magnitude. The magnitude scale likely predates the ancient Roman astronomer Claudius Ptolemy, whose star catalog popularized the system by listing stars from 1st magnitude (brightest) to 6th magnitude (dimmest).[1] In 1856 Norman Pogson defined the modern scale mathematically so that it closely matches this historical system.

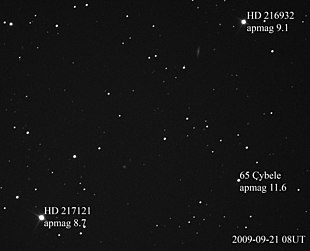

The scale is reverse logarithmic: the brighter an object is, the lower its magnitude number. A difference of 1.0 in magnitude corresponds to a brightness ratio of $\sqrt[5]{100}$, or about 2.512. For example, a magnitude 2.0 star is 2.512 times as bright as a magnitude 3.0 star, 6.31 times as bright as a magnitude 4.0 star, and 100 times as bright as a magnitude 7.0 star.
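The ratios above follow from raising $\sqrt[5]{100}$ to the power of the magnitude difference, which is the same as $10^{0.4\,\Delta m}$. The sketch below reproduces the example figures; the function and constant names are hypothetical and chosen only for illustration.

```python
import math

# One magnitude step is the fifth root of 100 (about 2.512), so that a
# difference of exactly 5 magnitudes is a brightness factor of exactly 100.
POGSON_RATIO = 100 ** (1 / 5)


def brightness_ratio(m_faint: float, m_bright: float) -> float:
    """How many times brighter an object of magnitude m_bright appears than
    one of magnitude m_faint (a lower magnitude means a brighter object)."""
    return 10 ** (0.4 * (m_faint - m_bright))


def magnitude_difference(flux_ratio: float) -> float:
    """Inverse relation: magnitude difference (m_faint - m_bright) that
    corresponds to a measured brightness (flux) ratio F_bright / F_faint."""
    if flux_ratio <= 0:
        raise ValueError("flux ratio must be positive")
    return 2.5 * math.log10(flux_ratio)


if __name__ == "__main__":
    # Reproduce the examples from the text for a magnitude 2.0 star.
    for m in (3.0, 4.0, 7.0):
        print(m, round(brightness_ratio(m, 2.0), 3))
```

Working with $10^{0.4\,\Delta m}$ rather than repeatedly multiplying by 2.512 avoids accumulating the rounding error in the truncated constant.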

The brightest astronomical objects have negative apparent magnitudes: for example, Venus at −4.2 or Sirius at −1.46. The faintest stars visible to the naked eye on the darkest night have apparent magnitudes of about +6.5, though this varies with a person's eyesight, altitude, and atmospheric conditions.[2] The apparent magnitudes of known objects range from the Sun at −26.832 to objects in deep Hubble Space Telescope images of magnitude +31.5.[3]
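As a rough worked example, applying the rule that one magnitude is a factor of $\sqrt[5]{100} = 10^{0.4}$ to the two ends of that range suggests how wide it is in terms of brightness (arithmetic only; it ignores that the two measurements may use different passbands):

$$
\frac{F_{\odot}}{F_{+31.5}} = 10^{0.4\,\left(31.5 - (-26.832)\right)} = 10^{0.4 \times 58.332} = 10^{23.3328} \approx 2.15 \times 10^{23}
$$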

The measurement of apparent magnitude is called photometry. Photometric measurements are made in the ultraviolet, visible, or infrared wavelength bands using standard passband filters belonging to photometric systems such as the UBV system or the Strömgren uvbyβ system. Measurement in the V-band may be referred to as the apparent visual magnitude.

Absolute magnitude is a related quantity which measures the luminosity that a celestial object emits, rather than its apparent brightness when observed, and is expressed on the same reverse logarithmic scale. Absolute magnitude is defined as the apparent magnitude that a star or object would have if it were observed from a distance of 10 parsecs (33 light-years; 3.1×10¹⁴ kilometres; 1.9×10¹⁴ miles). Therefore, it is of greater use in stellar astrophysics since it refers to a property of a star regardless of how close it is to Earth. But in observational astronomy and popular stargazing, references to "magnitude" are understood to mean apparent magnitude.
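Because received flux falls off with the inverse square of distance, moving an object from 10 pc to a distance $d$ changes its flux by a factor of $(10/d)^2$, which on the magnitude scale is the distance modulus $m - M = 5\log_{10}(d / 10\ \mathrm{pc})$, plus any extinction $A$ in magnitudes. The sketch below applies that relation; the function names are hypothetical, and the extinction term defaults to zero as a simplifying assumption.

```python
import math


def absolute_magnitude(m: float, distance_pc: float, extinction: float = 0.0) -> float:
    """Absolute magnitude M from apparent magnitude m and distance in parsecs,
    using m - M = 5*log10(d / 10 pc) + A. The extinction A (in magnitudes)
    is assumed to be 0 unless given."""
    if distance_pc <= 0:
        raise ValueError("distance must be positive")
    return m - 5 * math.log10(distance_pc / 10.0) - extinction


def apparent_magnitude(M: float, distance_pc: float, extinction: float = 0.0) -> float:
    """Inverse relation: apparent magnitude of an object with absolute
    magnitude M seen from distance_pc parsecs, with optional extinction."""
    if distance_pc <= 0:
        raise ValueError("distance must be positive")
    return M + 5 * math.log10(distance_pc / 10.0) + extinction


if __name__ == "__main__":
    # Sirius: m = -1.46 (from the text); the distance of about 2.64 pc is an
    # assumed value used only for illustration. Result is roughly +1.43.
    print(round(absolute_magnitude(-1.46, 2.64), 2))
```

At exactly 10 pc the logarithm vanishes, so an unobscured object's apparent and absolute magnitudes coincide, matching the definition above.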

Amateur astronomers commonly express the darkness of the sky in terms of limiting magnitude, i.e. the apparent magnitude of the faintest star they can see with the naked eye. This can be useful as a way of monitoring the spread of light pollution.

Apparent magnitude is technically a measure of illuminance, which can also be measured in photometric units such as lux.[4]
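Since magnitude is a logarithm of illuminance, converting between the two only needs a zero point: the illuminance that corresponds to magnitude 0. The sketch below assumes one commonly quoted visual-band relation, $m = -14.18 - 2.5\log_{10}(E / 1\ \mathrm{lx})$; published zero points differ slightly, so the constant is an approximation, and the function names are hypothetical.

```python
import math

# ASSUMED zero point for visual (V-band) magnitudes:
#   m = -14.18 - 2.5 * log10(E / 1 lx)
# Other sources quote slightly different values; treat this as approximate.
ZERO_POINT_MAG = -14.18


def magnitude_to_lux(m: float) -> float:
    """Approximate illuminance in lux produced by an object of magnitude m."""
    return 10 ** ((ZERO_POINT_MAG - m) / 2.5)


def lux_to_magnitude(lux: float) -> float:
    """Inverse conversion: apparent magnitude for an illuminance in lux."""
    if lux <= 0:
        raise ValueError("illuminance must be positive")
    return ZERO_POINT_MAG - 2.5 * math.log10(lux)


if __name__ == "__main__":
    # Magnitudes taken from the text; results are order-of-magnitude estimates.
    for name, m in (("Sun", -26.832), ("Venus", -4.2), ("naked-eye limit", 6.5)):
        print(f"{name}: {magnitude_to_lux(m):.3g} lx")
```

With this zero point the Sun comes out near 1.15×10⁵ lx and Venus near 10⁻⁴ lx; a different zero point shifts every result by the same constant factor.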

- ^ Toomer, G. J. (1984). Ptolemy's Almagest. New York: Springer-Verlag. p. 16. ISBN 0-387-91220-7.

- ^ Curtis, Heber Doust (1903) [1901-03-27]. "On the Limits of Unaided Vision". Lick Observatory Bulletin. 2 (38). University of California: 67–69. Bibcode:1903LicOB...2...67C. doi:10.5479/ADS/bib/1903LicOB.2.67C.

- ^ Templeton, Matthew (21 October 2011). "Magnitudes: Measuring the Brightness of Stars". American Association of Variable Stars (AAVSO). Archived from the original on 18 May 2019. Retrieved 19 May 2019.

- ^ Crumey, A. (2014). "Human Contrast Threshold and Astronomical Visibility". Monthly Notices of the Royal Astronomical Society. 442 (3): 2600–2619. arXiv:1405.4209. Bibcode:2014MNRAS.442.2600C. doi:10.1093/mnras/stu992.
