
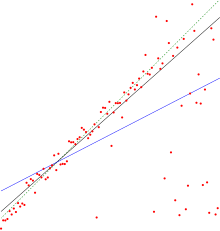

In statistics, the coefficient of determination, denoted R² or r² and pronounced "R squared", is the proportion of the variation in the dependent variable that is predictable from the independent variable(s).

It is a statistic used in the context of statistical models whose main purpose is either the prediction of future outcomes or the testing of hypotheses, on the basis of other related information. It provides a measure of how well observed outcomes are replicated by the model, based on the proportion of total variation of outcomes explained by the model.[1][2][3]
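As a minimal sketch of "proportion of total variation explained", the snippet below assumes one common definition, R² = 1 − SS_res / SS_tot, where SS_res is the sum of squared residuals and SS_tot is the sum of squared deviations of the outcomes from their mean. The function name `r_squared` and the data are illustrative and not part of the article.

```python
def r_squared(observed, predicted):
    """Return 1 - SS_res / SS_tot for paired observed and predicted values.

    Assumption: this is one common definition of R^2, used here for illustration.
    """
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_obs = sum(observed) / len(observed)
    # Total variation of the outcomes around their mean.
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    # Variation left unexplained by the predictions.
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when the outcomes are constant")
    return 1.0 - ss_res / ss_tot


# Illustrative data, not taken from the article.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
r2 = r_squared(observed, predicted)
print(f"R^2 = {r2:.2f}")  # prints R^2 = 0.98
```

Here SS_tot is 5.0 and SS_res is about 0.10, so roughly 98% of the variation in the outcomes is accounted for by the predictions.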

There are several definitions of R² that are only sometimes equivalent. One class of cases where they coincide is simple linear regression, where the notation r² is used instead of R². When the model contains a single regressor and an intercept, r² is simply the square of the sample correlation coefficient r between the observed outcomes and the observed predictor values.[4] If additional regressors are included, R² is the square of the coefficient of multiple correlation. In both cases, the coefficient of determination normally ranges from 0 to 1.
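The equivalence for simple linear regression with an intercept can be checked numerically. The sketch below fits an ordinary least-squares line by hand and compares 1 − SS_res / SS_tot (an assumed definition of R²) with the squared sample correlation. The helper names and the data are hypothetical.

```python
import math


def mean(values):
    return sum(values) / len(values)


def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


def pearson_r(x, y):
    """Sample correlation coefficient between x and y."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Illustrative data, not taken from the article.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_line(x, y)
fitted = [slope * a + intercept for a in x]

ss_res = sum((b - f) ** 2 for b, f in zip(y, fitted))
ss_tot = sum((b - mean(y)) ** 2 for b in y)
r2_from_fit = 1.0 - ss_res / ss_tot
r2_from_corr = pearson_r(x, y) ** 2

# The two values agree up to floating-point rounding.
print(r2_from_fit, r2_from_corr)
```

The agreement relies on the intercept being part of the fitted model. Without it, the two quantities can differ.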

There are cases where R² can yield negative values. This can arise when the predictions that are being compared to the corresponding outcomes have not been derived from a model-fitting procedure using those data. Even if a model-fitting procedure has been used, R² may still be negative, for example when linear regression is conducted without including an intercept,[5] or when a non-linear function is used to fit the data.[6] In cases where negative values arise, the mean of the data provides a better fit to the outcomes than do the fitted function values, according to this particular criterion.
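Both routes to a negative value can be illustrated with a small sketch. It assumes the definition R² = 1 − SS_res / SS_tot with SS_tot measured around the mean of the outcomes. Other definitions exist for regression without an intercept, so the second result is specific to this assumed definition. All names and data are illustrative.

```python
def r_squared(observed, predicted):
    """1 - SS_res / SS_tot, with SS_tot taken around the mean of the outcomes."""
    mean_obs = sum(observed) / len(observed)
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    return 1.0 - ss_res / ss_tot


# Case 1: predictions that were not fitted to these data.
observed = [1.0, 2.0, 3.0]
unfitted_predictions = [3.0, 2.0, 1.0]
r2_unfitted = r_squared(observed, unfitted_predictions)  # 1 - 8/2 = -3

# Case 2: least-squares regression through the origin (no intercept)
# on data that decrease with x and sit far from zero.
x = [1.0, 2.0, 3.0]
y = [10.0, 9.0, 8.0]
slope = sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)
fitted_through_origin = [slope * a for a in x]
r2_no_intercept = r_squared(y, fitted_through_origin)

print(r2_unfitted, r2_no_intercept)  # both values are negative
```

In both cases, predicting the mean of the outcomes for every point would give R² = 0, which is a better score than the negative values obtained.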

The coefficient of determination can be more intuitively informative than MAE, MAPE, MSE, and RMSE in regression analysis evaluation, as the former can be expressed as a percentage, whereas the latter measures have arbitrary ranges. It also proved more robust for poor fits compared to SMAPE on certain test datasets.[7]
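One way to see the difference in scale is to change the units of the data. The sketch below uses common textbook definitions of MAE, MSE and RMSE, and assumes R² = 1 − SS_res / SS_tot. It evaluates the same hypothetical predictions in metres and in centimetres. The function name and data are illustrative only.

```python
import math


def regression_metrics(observed, predicted):
    """Return MAE, MSE, RMSE and R^2 (as 1 - SS_res / SS_tot) in a dict."""
    n = len(observed)
    errors = [y - f for y, f in zip(observed, predicted)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_obs = sum(observed) / n
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    r2 = 1.0 - sum(e * e for e in errors) / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse), "R2": r2}


# Illustrative measurements in metres, then the same values in centimetres.
obs_m = [1.2, 1.5, 1.9, 2.4]
pred_m = [1.1, 1.6, 2.0, 2.2]
obs_cm = [100.0 * v for v in obs_m]
pred_cm = [100.0 * v for v in pred_m]

metrics_m = regression_metrics(obs_m, pred_m)
metrics_cm = regression_metrics(obs_cm, pred_cm)

for name in ("MAE", "MSE", "RMSE", "R2"):
    print(name, metrics_m[name], metrics_cm[name])
```

MAE and RMSE grow by a factor of 100 and MSE by a factor of 10,000 when the units change, while R² stays the same up to rounding.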

When evaluating the goodness-of-fit of simulated (Ypred) versus measured (Yobs) values, it is not appropriate to base this on the R² of the linear regression (i.e., Yobs = m·Ypred + b). That R² quantifies the degree of any linear correlation between Yobs and Ypred, while for the goodness-of-fit evaluation only one specific linear relationship should be taken into consideration: Yobs = 1·Ypred + 0 (i.e., the 1:1 line).[8][9]
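The distinction can be made concrete with deliberately biased predictions. In the sketch below, the hypothetical simulated values are an exact linear function of the measurements, so the regression Yobs = m·Ypred + b fits perfectly, yet the predictions lie far from the 1:1 line. The definition R² = 1 − SS_res / SS_tot and all names are assumptions for illustration.

```python
def mean(values):
    return sum(values) / len(values)


def r_squared(observed, predicted):
    """1 - SS_res / SS_tot, comparing predictions directly with observations."""
    ss_tot = sum((y - mean(observed)) ** 2 for y in observed)
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    return 1.0 - ss_res / ss_tot


# Illustrative data: every simulated value equals 2 * measured + 5.
y_obs = [1.0, 2.0, 3.0, 4.0, 5.0]
y_pred = [2.0 * y + 5.0 for y in y_obs]

# R^2 of the regression y_obs = m * y_pred + b (least squares, with intercept).
mp, mo = mean(y_pred), mean(y_obs)
m = sum((p - mp) * (o - mo) for p, o in zip(y_pred, y_obs)) / sum(
    (p - mp) ** 2 for p in y_pred
)
b = mo - m * mp
r2_regression = r_squared(y_obs, [m * p + b for p in y_pred])

# R^2 against the 1:1 line, i.e. using y_pred itself as the prediction.
r2_one_to_one = r_squared(y_obs, y_pred)

print(r2_regression)  # close to 1: perfect linear correlation
print(r2_one_to_one)  # strongly negative: poor agreement with the 1:1 line
```

The regression-based value rewards any linear relationship, including one with the wrong slope and offset, whereas the comparison against the 1:1 line penalizes the bias.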

1. Steel, R. G. D.; Torrie, J. H. (1960). Principles and Procedures of Statistics with Special Reference to the Biological Sciences. McGraw Hill.

2. Glantz, Stanton A.; Slinker, B. K. (1990). Primer of Applied Regression and Analysis of Variance. McGraw-Hill. ISBN 978-0-07-023407-9.

3. Draper, N. R.; Smith, H. (1998). Applied Regression Analysis. Wiley-Interscience. ISBN 978-0-471-17082-2.

4. Devore, Jay L. (2011). Probability and Statistics for Engineering and the Sciences (8th ed.). Boston, MA: Cengage Learning. pp. 508–510. ISBN 978-0-538-73352-6.

5. Barten, Anton P. (1987). "The Coefficient of Determination for Regression without a Constant Term". In Heijmans, Risto; Neudecker, Heinz (eds.). The Practice of Econometrics. Dordrecht: Kluwer. pp. 181–189. ISBN 90-247-3502-5.

6. Cameron, A. Colin; Windmeijer, Frank A. G. (1997). "An R-squared measure of goodness of fit for some common nonlinear regression models". Journal of Econometrics. 77 (2): 329–342. doi:10.1016/S0304-4076(96)01818-0.

7. Chicco, Davide; Warrens, Matthijs J.; Jurman, Giuseppe (2021). "The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation". PeerJ Computer Science. 7: e623. doi:10.7717/peerj-cs.623. PMC 8279135. PMID 34307865.

8. Legates, D. R.; McCabe, G. J. (1999). "Evaluating the use of 'goodness-of-fit' measures in hydrologic and hydroclimatic model validation". Water Resources Research. 35 (1): 233–241. Bibcode:1999WRR....35..233L. doi:10.1029/1998WR900018. S2CID 128417849.

9. Ritter, A.; Muñoz-Carpena, R. (2013). "Performance evaluation of hydrological models: statistical significance for reducing subjectivity in goodness-of-fit assessments". Journal of Hydrology. 480 (1): 33–45. Bibcode:2013JHyd..480...33R. doi:10.1016/j.jhydrol.2012.12.004.