
|

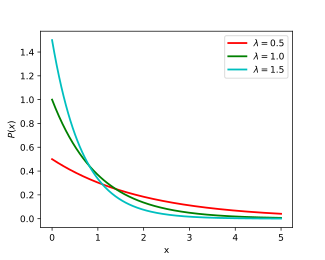

Probability density function  | |||

|

Cumulative distribution function  | |||

| Parameters | rate, or inverse scale | ||

|---|---|---|---|

| Support | |||

| CDF | |||

| Quantile | |||

| Mean | |||

| Median | |||

| Mode | |||

| Variance | |||

| Skewness | |||

| Excess kurtosis | |||

| Entropy | |||

| MGF | |||

| CF | |||

| Fisher information | |||

| Kullback–Leibler divergence | |||

| Expected shortfall | |||

In probability theory and statistics, the exponential distribution or negative exponential distribution is the probability distribution of the distance between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. The distance can be any meaningful one-dimensional measure of the process, such as the time between production errors or the length along a roll of fabric in a weaving process.[1] It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless.[2] In addition to its use in the analysis of Poisson point processes, it is found in various other contexts.[3]
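The memoryless property and the link to the geometric distribution can be checked numerically. The following is a minimal sketch, not a reference implementation: the function names `survival`, `conditional_survival`, and `floor_pmf`, as well as the rate and time values, are illustrative assumptions. It relies only on the standard survival function $P(X > x) = e^{-\lambda x}$.

```python
import math


def survival(x: float, rate: float) -> float:
    """P(X > x) for an exponential distribution with the given rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return math.exp(-rate * x) if x >= 0 else 1.0


def conditional_survival(s: float, t: float, rate: float) -> float:
    """P(X > s + t | X > s), computed directly from the survival function."""
    return survival(s + t, rate) / survival(s, rate)


def floor_pmf(k: int, rate: float) -> float:
    """P(floor(X) = k), i.e. the probability that X falls in [k, k + 1)."""
    return survival(k, rate) - survival(k + 1, rate)


rate = 0.5        # hypothetical rate: 0.5 events per unit of time
s, t = 3.0, 2.0   # hypothetical elapsed time and additional waiting time

# Memorylessness: having already waited s does not change the chance
# of waiting a further t.
lhs = conditional_survival(s, t, rate)
rhs = survival(t, rate)
print("memoryless:", math.isclose(lhs, rhs))

# Continuous analogue of the geometric distribution: the integer part of X
# is geometric on {0, 1, 2, ...} with success probability p = 1 - exp(-rate).
p = 1.0 - math.exp(-rate)
k = 3
geometric_pmf = (1.0 - p) ** k * p
print("geometric match:", math.isclose(floor_pmf(k, rate), geometric_pmf))
```

Both comparisons use `math.isclose` rather than exact equality because the two sides are computed along different floating-point paths.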

The exponential distribution should not be confused with the exponential families of distributions. The latter is a large class of probability distributions that includes the exponential distribution as one of its members, along with many others, such as the normal, binomial, gamma, and Poisson distributions.[3]
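To see why the exponential distribution belongs to this class, its density can be written in the usual exponential-family form. The symbols $h$, $T$, $\eta$, and $A$ below follow a common textbook convention and are assumptions of this sketch, not notation taken from the cited sources.

```latex
% Exponential density with rate \lambda > 0, for x \ge 0:
f(x;\lambda) = \lambda e^{-\lambda x}
             = h(x)\,\exp\bigl(\eta\,T(x) - A(\eta)\bigr),
\qquad
h(x) = 1,\quad T(x) = x,\quad \eta = -\lambda,\quad A(\eta) = -\ln(-\eta).

% Check: \exp\bigl(\eta x - A(\eta)\bigr)
%      = \exp\bigl(-\lambda x + \ln\lambda\bigr)
%      = \lambda e^{-\lambda x}.
```

In this form the natural parameter is $\eta = -\lambda < 0$ and the sufficient statistic is $x$ itself.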

1. "7.2: Exponential Distribution". Statistics LibreTexts. 2021-07-15. Retrieved 2024-10-11.

2. "Exponential distribution | mathematics | Britannica". www.britannica.com. Retrieved 2024-10-11.

3. Weisstein, Eric W. "Exponential Distribution". mathworld.wolfram.com. Retrieved 2024-10-11.