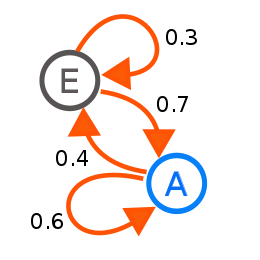

A Markov chain or Markov process is a stochastic process describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs now." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). Markov processes are named in honor of the Russian mathematician Andrey Markov.
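As a minimal sketch of the discrete-time case, the Python below simulates a chain with two states. The state names `A` and `E`, the transition probabilities in `TRANSITIONS`, and the helper functions `step` and `simulate` are hypothetical choices made for illustration. The point to notice is that `step` looks only at the current state when choosing the next one, which is the Markov property described above.

```python
import random

# Hypothetical two-state chain: the states and transition probabilities
# are illustrative assumptions. Each row gives P(next state | current state)
# and sums to 1.
STATES = ["A", "E"]
TRANSITIONS = {
    "A": {"A": 0.6, "E": 0.4},
    "E": {"A": 0.7, "E": 0.3},
}


def step(state: str, rng: random.Random) -> str:
    """Draw the next state using only the current state (Markov property)."""
    targets = list(TRANSITIONS[state])
    weights = [TRANSITIONS[state][t] for t in targets]
    return rng.choices(targets, weights=weights, k=1)[0]


def simulate(start: str, n_steps: int, seed: int = 0) -> list[str]:
    """Return a path of n_steps + 1 states, beginning at `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        # The history in `path` is never consulted; only path[-1] matters.
        path.append(step(path[-1], rng))
    return path
```

For example, `simulate("A", 10)` returns a list of 11 states beginning with `"A"`. Fixing `seed` makes the path reproducible, which is convenient when comparing runs.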

Markov chains have many applications as statistical models of real-world processes.[1] They provide the basis for general stochastic simulation methods known as Markov chain Monte Carlo (MCMC), which are used to draw samples from complex probability distributions and have found application in areas including Bayesian statistics, biology, chemistry, economics, finance, information theory, physics, signal processing, and speech processing.[1][2][3]
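To make the Markov chain Monte Carlo idea concrete, here is a sketch of random-walk Metropolis, one of the simplest MCMC algorithms. It is an illustrative choice; MCMC covers many other methods. The function name `metropolis`, its parameters, and the Gaussian proposal are assumptions made for this example. Because each proposal is built from the current position only, the sequence of samples is itself a Markov chain, constructed so that its long-run distribution matches the target density.

```python
import math
import random
from typing import Callable


def metropolis(
    log_target: Callable[[float], float],
    x0: float,
    n_samples: int,
    step_size: float = 1.0,
    seed: int = 0,
) -> list[float]:
    """Hypothetical random-walk Metropolis sampler for a 1-D log density.

    `log_target` may be unnormalized: only differences of log densities
    are used, so the normalizing constant cancels.
    """
    rng = random.Random(seed)
    x = x0
    log_p = log_target(x)
    samples = []
    for _ in range(n_samples):
        # Symmetric Gaussian proposal centred on the current state.
        proposal = x + rng.gauss(0.0, step_size)
        log_p_new = log_target(proposal)
        # Accept with probability min(1, p(proposal) / p(x)); min() keeps
        # exp() from overflowing when the proposal is much more likely.
        if rng.random() < math.exp(min(0.0, log_p_new - log_p)):
            x, log_p = proposal, log_p_new
        # On rejection the chain stays put and the current x is repeated.
        samples.append(x)
    return samples
```

Passing `lambda x: -0.5 * x * x` as `log_target` samples from a standard normal distribution known only up to its normalizing constant; with enough samples, the sample mean and variance should approach 0 and 1. In practice, early samples are often discarded as burn-in, and `step_size` is tuned to give a reasonable acceptance rate.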

The adjectives Markovian and Markov are used to describe something that is related to a Markov process.[4]

- [1] Sean Meyn; Richard L. Tweedie (2 April 2009). Markov Chains and Stochastic Stability. Cambridge University Press. p. 3. ISBN 978-0-521-73182-9.

- [2] Reuven Y. Rubinstein; Dirk P. Kroese (20 September 2011). Simulation and the Monte Carlo Method. John Wiley & Sons. p. 225. ISBN 978-1-118-21052-9.

- [3] Dani Gamerman; Hedibert F. Lopes (10 May 2006). Markov Chain Monte Carlo: Stochastic Simulation for Bayesian Inference, Second Edition. CRC Press. ISBN 978-1-58488-587-0.

- [4] "Markovian". Oxford English Dictionary (Online ed.). Oxford University Press.