
In the context of artificial neural networks, the rectifier or ReLU (rectified linear unit) activation function[1][2] is an activation function defined as the non-negative part of its argument:

$$\operatorname{ReLU}(x) = x^{+} = \max(0, x),$$

where $x$ is the input to a neuron. This is also known as a ramp function and is analogous to half-wave rectification in electrical engineering.
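As an illustration of the definition above, the non-negative part of the argument can be sketched in a few lines of plain Python. The function names `relu` and `relu_abs_form` are hypothetical and chosen only for this example; the second function uses the identity max(0, x) = (x + |x|)/2, which is a standard equivalent form rather than something stated in the text above.

```python
def relu(x: float) -> float:
    """Return the non-negative part of x, i.e. max(0, x)."""
    return max(0.0, x)


def relu_abs_form(x: float) -> float:
    """Same function written with an absolute value: (x + |x|) / 2."""
    return (x + abs(x)) / 2.0


# Negative inputs are clipped to zero; non-negative inputs pass through unchanged.
inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in inputs]
print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
```

Both forms agree on every input in the list; the `max` form is the one that matches the definition most directly.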

ReLU is one of the most popular activation functions for artificial neural networks,[3] and finds application in computer vision[4] and speech recognition[5][6] using deep neural nets, as well as in computational neuroscience.[7][8][9]

The function was first used by Alston Householder in 1941 as a mathematical abstraction of biological neural networks.[10] Kunihiko Fukushima introduced it in 1969 in the context of visual feature extraction in hierarchical neural networks.[11][12] It was later argued to have strong biological motivations and mathematical justifications.[13][14] In 2011,[4] ReLU activation enabled the training of deep supervised neural networks without unsupervised pre-training, in contrast to the activation functions widely used before 2011, e.g., the logistic sigmoid (which is inspired by probability theory; see logistic regression) and its more practical[15] counterpart, the hyperbolic tangent.

- ^ Brownlee, Jason (8 January 2019). "A Gentle Introduction to the Rectified Linear Unit (ReLU)". Machine Learning Mastery. Retrieved 8 April 2021.

- ^ Liu, Danqing (30 November 2017). "A Practical Guide to ReLU". Medium. Retrieved 8 April 2021.

- ^ Ramachandran, Prajit; Zoph, Barret; Le, Quoc V. (October 16, 2017). "Searching for Activation Functions". arXiv:1710.05941 [cs.NE].

- ^ a b Xavier Glorot; Antoine Bordes; Yoshua Bengio (2011). Deep sparse rectifier neural networks (PDF). AISTATS.

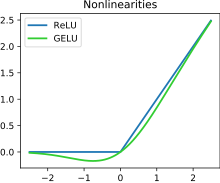

Rectifier and softplus activation functions. The second one is a smooth version of the first.

- ^ László Tóth (2013). Phone Recognition with Deep Sparse Rectifier Neural Networks (PDF). ICASSP.

- ^ Andrew L. Maas, Awni Y. Hannun, Andrew Y. Ng (2014). Rectifier Nonlinearities Improve Neural Network Acoustic Models.

- ^ Hansel, D.; van Vreeswijk, C. (2002). "How noise contributes to contrast invariance of orientation tuning in cat visual cortex". J. Neurosci. 22 (12): 5118–5128. doi:10.1523/JNEUROSCI.22-12-05118.2002. PMC 6757721. PMID 12077207.

- ^ Kadmon, Jonathan; Sompolinsky, Haim (2015-11-19). "Transition to Chaos in Random Neuronal Networks". Physical Review X. 5 (4): 041030. arXiv:1508.06486. Bibcode:2015PhRvX...5d1030K. doi:10.1103/PhysRevX.5.041030. S2CID 7813832.

- ^ Engelken, Rainer; Wolf, Fred; Abbott, L. F. (2020-06-03). "Lyapunov spectra of chaotic recurrent neural networks". arXiv:2006.02427 [nlin.CD].

- ^ Householder, Alston S. (June 1941). "A theory of steady-state activity in nerve-fiber networks: I. Definitions and preliminary lemmas". The Bulletin of Mathematical Biophysics. 3 (2): 63–69. doi:10.1007/BF02478220. ISSN 0007-4985.

- ^ Fukushima, K. (1969). "Visual feature extraction by a multilayered network of analog threshold elements". IEEE Transactions on Systems Science and Cybernetics. 5 (4): 322–333. doi:10.1109/TSSC.1969.300225.

- ^ Fukushima, K.; Miyake, S. (1982). "Neocognitron: A Self-Organizing Neural Network Model for a Mechanism of Visual Pattern Recognition". Competition and Cooperation in Neural Nets. Lecture Notes in Biomathematics. Vol. 45. Springer. pp. 267–285. doi:10.1007/978-3-642-46466-9_18. ISBN 978-3-540-11574-8.

- ^ Hahnloser, R.; Sarpeshkar, R.; Mahowald, M. A.; Douglas, R. J.; Seung, H. S. (2000). "Digital selection and analogue amplification coexist in a cortex-inspired silicon circuit". Nature. 405 (6789): 947–951. Bibcode:2000Natur.405..947H. doi:10.1038/35016072. PMID 10879535. S2CID 4399014.

- ^ Hahnloser, R.; Seung, H. S. (2001). Permitted and Forbidden Sets in Symmetric Threshold-Linear Networks. NIPS 2001.

- ^ Yann LeCun; Leon Bottou; Genevieve B. Orr; Klaus-Robert Müller (1998). "Efficient BackProp" (PDF). In G. Orr; K. Müller (eds.). Neural Networks: Tricks of the Trade. Springer.